The ShanghaiTech Automation and Robotics Center (STAR Center) of SIST has long focused on research and innovation in robotics and automation. Recently, several of its results were published at top conferences and in top journals, including the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) and IEEE Robotics and Automation Letters (RA-L).

Advanced Sensing

Humans rely mainly on their eyes to obtain information from the outside world, whereas robots use a variety of sensors suited to specific tasks and environments. Obtaining external information through sensors is the most basic and important part of robotics and automation.

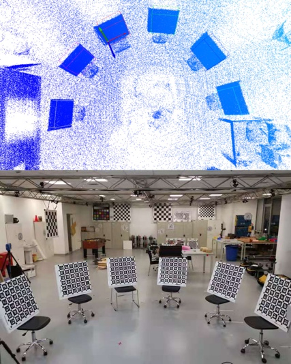

Event cameras have recently gained popularity because they hold strong potential to complement regular cameras in highly dynamic scenes or under challenging illumination. They do not capture images with a shutter as regular cameras do; instead, the pixels inside an event camera report changes in brightness as they occur. Researchers from the Mobile Perception Lab (MPL) of the STAR Center completed a set of benchmark datasets captured by a multi-sensor setup containing an event-based stereo camera, a regular stereo camera, multiple depth sensors, and an inertial measurement unit. This set of datasets is a novel benchmark that satisfies the requirements of Simultaneous Localization And Mapping (SLAM) problems. All sequences come with ground truth data captured by highly accurate external reference devices such as a motion capture system. Individual sequences include both small-scale and large-scale environments, and cover the specific challenges targeted by dynamic vision sensors. The researchers published their results in a paper entitled “VECtor: A Versatile Event-Centric Benchmark for Multi-Sensor SLAM” in RA-L. Third-year Ph.D. candidate Gao Ling, MPL visiting student Liang Yuxuan, and first-year master's student Yang Jiaqi are the co-first authors. Associate Professor Laurent Kneip is the corresponding author.

Link to the paper: https://ieeexplore.ieee.org/abstract/document/9809788
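
To illustrate how event-camera pixels report brightness changes rather than full images, the following is a minimal sketch of a commonly used idealized event model. It is not taken from the VECtor paper. It assumes that a pixel fires an event when its log-brightness changes by at least a contrast threshold, and it approximates the asynchronous behavior of a real sensor by comparing two frames. The function name `generate_events` and the threshold value are hypothetical.

```python
import math


def generate_events(prev_frame, curr_frame, timestamp, threshold=0.2):
    """Return (x, y, timestamp, polarity) tuples for pixels whose
    log-brightness changed by at least `threshold` between two frames.

    Simplification: a real event camera fires per pixel and asynchronously;
    here two frames are compared to mimic that behavior.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (prev, curr) in enumerate(zip(prev_row, curr_row)):
            # Work in log intensity; the small epsilon avoids log(0).
            delta = math.log(curr + 1e-6) - math.log(prev + 1e-6)
            if abs(delta) >= threshold:
                polarity = 1 if delta > 0 else -1  # brighter: +1, darker: -1
                events.append((x, y, timestamp, polarity))
    return events


# Two tiny 2x2 brightness frames: one pixel brightens, one darkens.
prev_frame = [[0.5, 0.5], [0.5, 0.5]]
curr_frame = [[0.5, 0.9], [0.2, 0.5]]
events = generate_events(prev_frame, curr_frame, timestamp=0.001)
print(events)
```

Only the two pixels that changed produce output, which is why event streams are sparse in static scenes and dense during fast motion.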

Generally, a sensor is fixed at a certain position on a robot. Knowledge of the positional relationship between the sensor and the camera is required for researchers to use the data returned by the sensor. When there are multiple sensors on a robot, the relative position of each pair of sensors must also be known; without it, the spatiotemporal calibration of the sensors becomes difficult. To achieve rapid calibration of different types of sensors, two labs of the STAR Center, MPL and the Mobile Autonomous Robotic Systems Lab (MARSL), proposed a general sensor calibration method called Multical. The method uses multiple calibration boards to estimate initial guesses of the spatial transformations between the sensors, freeing the user from having to provide these guesses manually. The proposed calibration approach was applied in both simulated and real-world experiments, and the results demonstrated its high fidelity. The results were published in an article entitled “Multical: Spatiotemporal Calibration for Multiple IMUs, Cameras and LiDARs” at IROS. Associate Professor Laurent Kneip and Associate Professor Sören Schwertfeger are the corresponding authors.

Link to the paper: https://ieeexplore.ieee.org/abstract/document/9982031
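
As a rough illustration of how a shared calibration board can yield an initial guess for the spatial transformation between two sensors, the sketch below composes rigid transforms. It is not the Multical implementation. It assumes each sensor has already estimated the pose of the same board in its own frame as a 4x4 homogeneous matrix, and the function names and example poses are hypothetical.

```python
def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p]: the inverse is [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def initial_extrinsic(t_a_board, t_b_board):
    """Return T_a_b, which maps points from sensor B's frame to sensor A's.

    t_a_board and t_b_board map board-frame points into the frames of
    sensor A and sensor B, so T_a_b = T_a_board * inverse(T_b_board).
    """
    return matmul(t_a_board, invert_rigid(t_b_board))


# Hypothetical observations: sensor A sees the board 2 m ahead, unrotated;
# sensor B sees it 1.5 m ahead, rotated 90 degrees about the z-axis.
t_a_board = [[1.0, 0.0, 0.0, 2.0],
             [0.0, 1.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 0.0],
             [0.0, 0.0, 0.0, 1.0]]
t_b_board = [[0.0, -1.0, 0.0, 1.5],
             [1.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 0.0],
             [0.0, 0.0, 0.0, 1.0]]
t_a_b = initial_extrinsic(t_a_board, t_b_board)
for row in t_a_b:
    print(row)
```

An estimate of this kind would typically serve only as a starting point that a subsequent optimization refines.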

Advanced Micro-Nano Control Technology

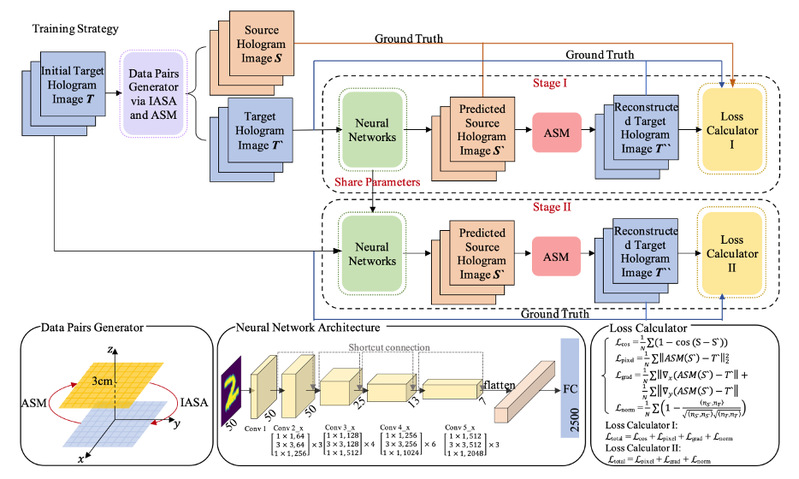

Non-contact robotic micro-nano manipulation is a new type of precision robotic operation. Compared with traditional contact-based precision manipulation using a gripper, it extends the scale of operable targets down to the micro-nano level, making it possible to manipulate biological macromolecules and subcellular structures. Researchers of the Advanced Micro-Nano Robot Lab at the STAR Center used holographic acoustic field reconstruction with a phased transducer array (PTA) and a physics-based deep learning framework. The work was published in an article entitled “Real-time Acoustic Holography with Physics-based Deep Learning for Acoustic Robot Manipulation” at IROS. Assistant Professor Liu Song is one of the corresponding authors.

Link to the paper: https://ieeexplore.ieee.org/abstract/document/9981395
