In 2022, the ShanghaiTech Automation and Robotics Center (STAR Center) of ShanghaiTech’s School of Information Science and Technology (SIST) achieved a notable success: its professors and students presented eight papers at the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), one of the leading conferences in robotics. Some of these papers were published in the IROS proceedings and others in the prestigious IEEE Robotics and Automation Letters (RA-L).

Advanced Sensing

Humans rely mainly on their eyes to gather information from the outside world, while robots use a variety of sensors suited to specific tasks and environments. Obtaining external information through sensors is the most basic and important part of robotics and automation.

The sensor platform

Event cameras have recently gained popularity because they can complement regular cameras in highly dynamic scenes or under challenging illumination. The Mobile Perception Lab’s (MPL) contribution is the first complete set of benchmark datasets captured with a multi-sensor setup containing an event-based stereo camera, a regular stereo camera, multiple depth sensors, and an inertial measurement unit. All sequences come with ground truth data captured by highly accurate external reference devices such as a motion capture system. The sequences cover both small- and large-scale environments and the specific challenges targeted by dynamic vision sensors. The paper, titled “VECtor: A Versatile Event-Centric Benchmark for Multi-Sensor SLAM”, was accepted by RA-L and presented at the IROS conference. Gao Ling, a 2019 SIST doctoral student, Liang Yuxuan, a visiting student at the MPL laboratory, and Yang Jiaqi, a 2022 SIST graduate student, are the co-first authors of the paper, and Professor Laurent Kneip is the corresponding author.

Link: https://ieeexplore.ieee.org/abstract/document/9809788

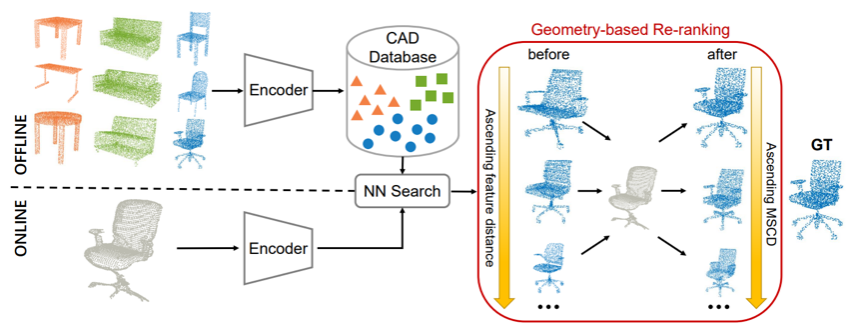

An RGB-D camera, also known as a depth camera, returns depth information in addition to the color information provided by a normal camera, and this depth information opens up many more possibilities. To recover detailed object shape geometries from RGB-D scans, the MPL lab presents a new solution for the fine-grained retrieval of clean CAD models from a large-scale database. Their approach first leverages the discriminative power of learned representations to distinguish between different categories of models and then uses a novel robust point set distance metric to re-rank the CAD neighborhood, enabling fine-grained retrieval in a large shape database. The related work was accepted by IROS with the title “Accurate Instance-Level CAD Model Retrieval in a Large-Scale Database”. Wei Jiaxin, a 2020 graduate student of the School of Information Science and Technology, is the first author. Hu Lan, a 2022 doctoral graduate, and Wang Chenyu, a 2022 undergraduate student, participated in the research project. Professor Laurent Kneip is the corresponding author.

https://arxiv.org/abs/2207.01339

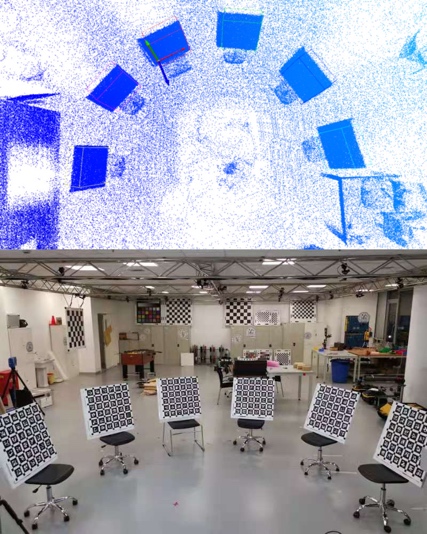

The pipeline
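The two-stage idea described above (shortlist candidates by learned embeddings, then re-rank them by a robust point set distance) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ method: the embeddings are assumed to be given, the database layout `(name, embedding, points)` and the function names are hypothetical, and a truncated Chamfer distance stands in for the paper’s own robust metric, whose exact form is not described here.

```python
import math


def chamfer_truncated(a, b, tau=0.05):
    """Symmetric Chamfer distance with per-point distances capped at tau.

    Capping limits the influence of outliers and missing parts, one common
    way to make a point set distance robust. Illustrative stand-in only,
    not the metric proposed in the paper.
    """
    def one_way(src, dst):
        total = 0.0
        for p in src:
            d = min(math.dist(p, q) for q in dst)  # nearest neighbour in dst
            total += min(d, tau)                   # truncate large distances
        return total / len(src)

    return one_way(a, b) + one_way(b, a)


def retrieve(query_emb, query_pts, database, k=3, tau=0.05):
    """Coarse-to-fine retrieval over a hypothetical database layout.

    database: list of (name, embedding, points) tuples.
    Stage 1 keeps the k entries whose embeddings are closest to the query.
    Stage 2 re-ranks those k candidates by the robust point set distance.
    """
    shortlist = sorted(database, key=lambda e: math.dist(query_emb, e[1]))[:k]
    reranked = sorted(shortlist,
                      key=lambda e: chamfer_truncated(query_pts, e[2], tau))
    return [name for name, _, _ in reranked]
```

In this toy setup, an entry whose embedding is far from the query is dropped at the first stage even if its geometry matches, and the remaining candidates are then ordered purely by shape distance.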

Sensors are generally fixed at certain positions on a robot. To use the data returned by a sensor, we need to know its position and orientation relative to the camera. When a robot carries multiple sensors, we also need the relative pose of each pair of sensors, and combining different types of sensors makes this calibration problem even harder. To enable rapid calibration of different types of sensors, the MPL lab and the Mobile Autonomous Robotic Systems Lab worked together to present a general sensor calibration method named Multical. It uses multiple calibration boards to estimate initial guesses of the spatial transformations between the sensors, so the user does not need to provide them. The proposed approach was evaluated in both simulated and real-world experiments, and the results demonstrate its high fidelity. The related work was accepted by IROS with the title “Multical: Spatiotemporal Calibration for Multiple IMUs, Cameras and LiDARs”. Xiangyang Zhi, an SIST master’s graduate, and Jiawei Hou, an SIST PhD candidate, are the co-first authors. Yiren Lu, an SIST bachelor’s graduate, is a co-author, as is Professor Laurent Kneip. Professor Sören Schwertfeger is the corresponding author.

3D scan and image of the calibration boards

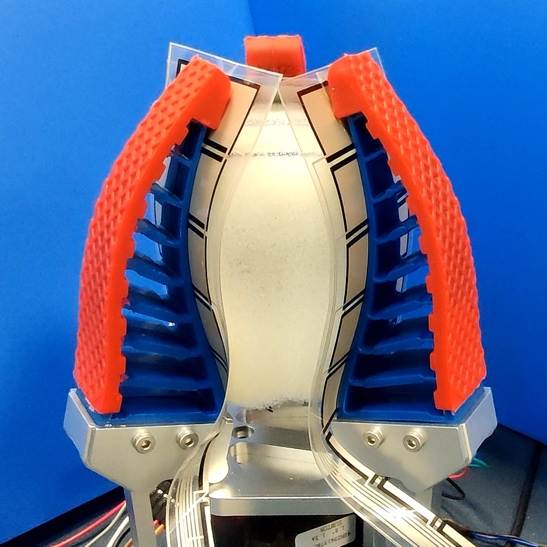
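The core geometric step behind using a calibration board for an initial guess can be illustrated with a single board seen by two sensors. If sensor A observes the board at pose T_A_board and sensor B observes it at T_B_board, the transform from B’s frame to A’s frame is T_A_B = T_A_board * inverse(T_B_board). The sketch below assumes both board poses are already known (for a camera they would typically come from detecting the board pattern) and only composes them; the function names are hypothetical, it is not Multical’s implementation, and it omits the multi-board, temporal, and joint-optimization parts of the method.

```python
import math


def make_pose(yaw, t):
    """4x4 homogeneous transform: rotation about z by `yaw` radians, then translation t."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(A, B):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(T):
    """Inverse of a rigid transform [R | t] is [R^T | -R^T t]."""
    R_t = [[T[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(R_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [R_t[0] + [t_inv[0]],
            R_t[1] + [t_inv[1]],
            R_t[2] + [t_inv[2]],
            [0.0, 0.0, 0.0, 1.0]]


def extrinsic_from_board(T_a_board, T_b_board):
    """Initial guess of T_a_b (maps points from frame b into frame a),
    given the board pose as observed by each sensor."""
    return matmul(T_a_board, rigid_inverse(T_b_board))
```

With several boards, each one visible to a given sensor pair yields such an estimate, and a full calibration would then refine all transforms jointly.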

In addition to common visual sensors, there are many other types of sensors, such as tactile sensors, which can obtain information that is occluded from vision or cannot be obtained visually at all. The Living Machines Lab (LiMa Lab) presents an algorithm that detects the size and shape of hard inclusions hidden in soft three-dimensional objects using a soft gripper with touch sensors, through a classification framework that incorporates Bayesian methods directly into neural network architectures. This is useful in many applications, for example robotic medical diagnosis of soft tissue. The experiments show that the new algorithm is more efficient than the previous approach while still achieving higher recognition accuracy than general deterministic CNNs. The related work was accepted by IROS with the title “Variable Stiffness Object Recognition with Bayesian Convolutional Neural Network on a Soft Gripper”. SIST graduate student Jinyue Cao is the first author, Jingyi Huang, also an SIST graduate student, took part in the project, and Professor Andre Rosendo is the corresponding author.

Soft gripper with object
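In a Bayesian neural network, weights are distributions rather than single values, and a prediction averages the outputs obtained with sampled weights, which also yields an uncertainty estimate. The toy sketch below shows this Monte Carlo averaging for a single linear softmax layer with independent Gaussian weights. It illustrates the general principle only, not the paper’s Bayesian CNN or its tactile data pipeline; `predict_bayesian`, the weight parameterization, and the sample count are hypothetical choices.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict_bayesian(x, w_mean, w_std, n_samples=200, seed=0):
    """Monte Carlo estimate of the predictive distribution
    p(y | x) ~ (1/S) * sum_s softmax(W_s x), with W_s[c][j] ~ N(w_mean[c][j], w_std[c][j]).

    Returns the averaged class probabilities and their entropy (an uncertainty measure).
    """
    rng = random.Random(seed)
    n_classes = len(w_mean)
    avg = [0.0] * n_classes
    for _ in range(n_samples):
        # Draw one set of weights and compute that network's class logits.
        logits = [sum(rng.gauss(w_mean[c][j], w_std[c][j]) * x[j]
                      for j in range(len(x)))
                  for c in range(n_classes)]
        for c, p in enumerate(softmax(logits)):
            avg[c] += p / n_samples
    entropy = -sum(p * math.log(p) for p in avg if p > 0)
    return avg, entropy
```

Predictive entropy is one common way to read uncertainty off the averaged distribution: it is low when the sampled networks agree and tends to rise as they disagree.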

Advanced Locomotion

Directing the body through various movements is simple for humans but difficult for robots of various shapes. Effectively driving a robot’s movements and overcoming obstacles is therefore an important part of robotics research.

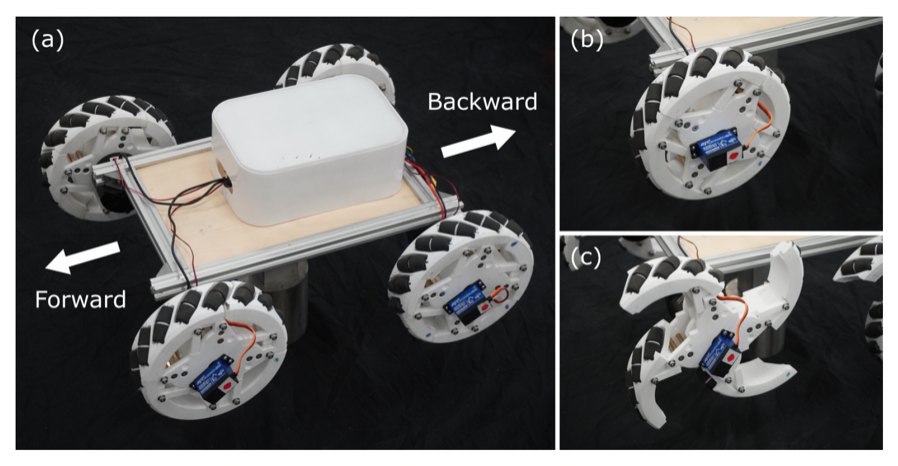

The OmniWheg wheel-leg robot in action

Wheels are the most common means of locomotion in robot design, but their fixed shape is limiting in many scenarios. For example, a wheeled robot cannot get past the most common urban obstacles, such as stairs and steps. LiMa Lab presents an omnidirectional transformable wheel-leg robot called OmniWheg. Its novel mechanism allows the robot to transform between omni-wheeled and legged modes. The robot can move in all directions, efficiently adjust the relative position of its wheels, and overcome common obstacles such as stairs and steps. The related work was accepted by IROS with the title “OmniWheg: An Omnidirectional Wheel-Leg Transformable Robot”. Ruixiang Cao is the first author, Jun Gu and Chen Yu took part in the project, and Professor Andre Rosendo is the corresponding author.

https://arxiv.org/abs/2203.02118v1

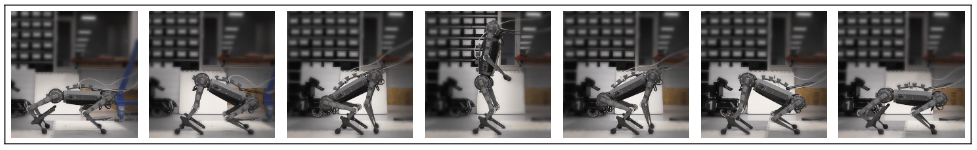

Common legged robots are divided into bipeds and quadrupeds. Bipedal robots are more flexible and faster, while quadrupedal robots are more stable and can cope with more complex environments. Both types have advantages and disadvantages, and a robot that combined the advantages of both would greatly benefit research on motion control. LiMa Lab proposes a multi-modal locomotion framework that enables a legged robot to switch between quadrupedal and bipedal modes, using special mechanics and a novel class of reinforcement learning algorithms. It combines the good stability of quadruped robots with the greater flexibility of bipedal robots by enabling the robot dog to stand up and use two of its legs as hands. The related work was accepted by IROS with the title “Multi-Modal Legged Locomotion Framework with Automated Residual Reinforcement Learning”. Chen Yu is the first author and Professor Andre Rosendo is the corresponding author. See https://chenaah.github.io/multimodal/ for videos.

https://arxiv.org/abs/2202.12033

Besides obstacles such as steps that are lower than the robot, robots sometimes need to climb obstacles taller than themselves, especially search-and-rescue robots in disaster areas. Conditions in such settings are rarely ideal, which makes solving these problems all the more important. LiMa Lab uses the same robot as above. The paper applies a learning-based Constrained Contextual Bayesian Optimization (CoCoBo) algorithm, built on Gaussian processes, to train the robot to climb obstacles taller than itself. The related work was accepted by RA-L with the title “Learning to Climb: Constrained Contextual Bayesian Optimization on a Multi-Modal Legged Robot”. Chen Yu is the first author, Jinyue Cao took part in the project, and Professor Andre Rosendo is the corresponding author.

Experiment videos are available at https://chenaah.github.io/coco/.

https://ieeexplore.ieee.org/document/9835039

Robot climbing obstacle
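CoCoBo itself handles constraints and context, but its Gaussian-process core can be illustrated with plain Bayesian optimization: fit a GP to the trials so far, then pick the next trial with an acquisition function. The sketch below is a simplified, unconstrained, non-contextual 1-D version using an upper-confidence-bound (UCB) acquisition; the RBF kernel length scale, noise level, `beta`, and all function names are assumptions for illustration, not values or code from the paper.

```python
import math


def rbf(x1, x2, length=0.3):
    """Squared-exponential (RBF) kernel with unit signal variance."""
    return math.exp(-0.5 * ((x1 - x2) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def gp_posterior(xs, ys, x, noise=1e-4):
    """Posterior mean and variance at x of a zero-mean GP with an RBF kernel."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x) for a in xs]
    alpha = solve(K, ys)   # (K + noise*I)^-1 y
    v = solve(K, k_star)   # (K + noise*I)^-1 k*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = rbf(x, x) - sum(k * w for k, w in zip(k_star, v))
    return mean, max(var, 0.0)


def next_query(xs, ys, candidates, beta=2.0):
    """Pick the candidate that maximises the UCB acquisition mean + beta * std."""
    def ucb(x):
        m, var = gp_posterior(xs, ys, x)
        return m + beta * math.sqrt(var)
    return max(candidates, key=ucb)
```

In use, one would evaluate the robot’s objective (for example, a hypothetical climbing score) at `next_query(xs, ys, candidates)`, append the result to `xs` and `ys`, and repeat; `beta` trades off exploring uncertain parameters against exploiting ones that already score well.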

Advanced Micro-Nano Control Technology

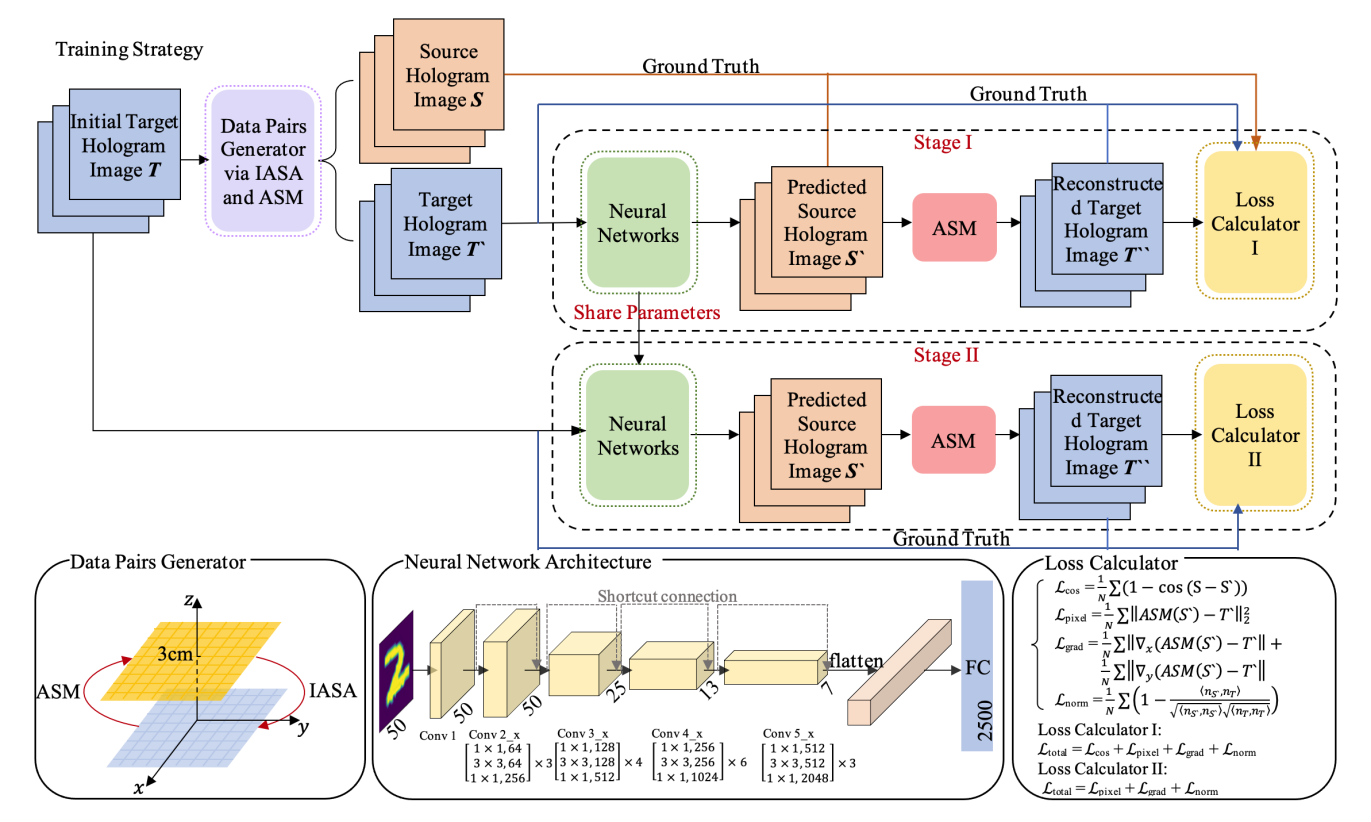

Non-contact robotic micro-nano manipulation is a new type of precision robotic manipulation. Compared with traditional grippers that perform contact-based precision manipulation, it extends the scale of operable targets to the micro-nano level, making the manipulation of biological macromolecules and subcellular structures possible. The Advanced Micro-Nano Robot Lab works on acoustic holography, a newly emerging and promising technique for dynamically reconstructing an arbitrary desired holographic acoustic field in 3D space for contactless robotic manipulation. The method performs holographic acoustic field reconstruction with a phased transducer array, driven by a physics-based deep learning framework. The related work was accepted by IROS with the title “Real-time Acoustic Holography with Physics-based Deep Learning for Acoustic Robot Manipulation”. Chengxi Zhong is the first author, Zhenhuan Sun and Kunyong Lv took part in the project, and Professors Yao Guo and Song Liu are the corresponding authors.
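The simplest acoustic hologram is a single focal point: each transducer in the phased array is driven with a phase that cancels its propagation delay, so all waves arrive at the target in phase. The sketch below computes those phases analytically under an idealized free-field model (unit-amplitude point sources, no directivity or attenuation) and evaluates the resulting pressure. It is a classical baseline for illustration only; the paper instead uses a physics-based deep learning framework to reconstruct arbitrary fields, and the 40 kHz frequency, array layout, and function names here are assumptions.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0   # m/s, air at about 20 degrees C
FREQ = 40_000.0          # Hz; assumed, a common airborne ultrasonic transducer frequency
K = 2 * math.pi * FREQ / SPEED_OF_SOUND  # wavenumber in rad/m


def focus_phases(transducers, focal_point):
    """Emission phase for each transducer so all waves arrive at the focal point in phase."""
    return [(-K * math.dist(r, focal_point)) % (2 * math.pi) for r in transducers]


def pressure(transducers, phases, point):
    """Complex pressure at `point` from ideal unit-amplitude point sources,
    each contributing exp(i * (phase + k * d)) / d at distance d."""
    total = 0j
    for r, phi in zip(transducers, phases):
        d = math.dist(r, point)
        total += cmath.exp(1j * (phi + K * d)) / d
    return total
```

For an 8 × 8 array with 10 mm pitch and a focal point 10 cm above its center, the focused pressure magnitude equals the sum of the 1/d terms, the largest value this model allows at that point, whereas driving every transducer with zero phase gives a smaller magnitude there.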

Shanghai Public Security Network Filing No. 31011502006855