The Visual and Data Intelligence Center (VDI Center) of SIST recently published a paper, 'Neural Opacity Point Cloud', in the top journal IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI). The journal had a 2019 impact factor of 17.861 and mainly publishes original research in artificial intelligence, pattern recognition, computer vision, and machine learning.

This work introduces a neural renderer that can render complex scenes and generate images from arbitrary viewpoints. It is a new deep learning application at the intersection of computer vision and computer graphics. It can greatly improve rendering quality and speed, and may also inspire future rendering technology. In the paper, graduate student Cen Wang and Ph.D. student Minye Wu propose a neural opacity point cloud (NOPC) rendering method that achieves high-quality rendering of fuzzy objects from arbitrary viewpoints. The method can generate photo-realistic results even from low-quality 3D point clouds.

The traditional technique, Image-Based Opacity Hull (IBOH), can produce artifacts and ghosting because of insufficient ray sampling. High-quality geometry alleviates this problem, but for fuzzy objects, obtaining accurate geometry remains a huge challenge. A fuzzy object can contain thousands of hair fibers. Because the fibers are extremely thin and occlude one another irregularly, they exhibit strongly view-dependent opacity, which is difficult to model in both geometry and appearance.

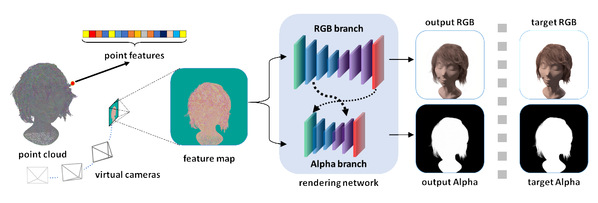

The proposed rendering method combines image-based rendering (IBR) with neural rendering. The pipeline takes a low-quality point cloud of the target object as input and uses captured images to render a photo-realistic image of the fuzzy object together with its alpha map. The work also proposes a capture system for collecting real-world data.

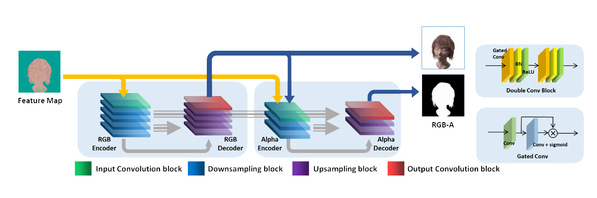

Specifically, NOPC consists of two modules. The first module learns to extract 3D point features. Each point feature encodes the local geometry and appearance around its 3D point. Projecting all 3D points and their features onto the novel view yields a feature map. The second module uses a convolutional neural network to decode an RGB image and an alpha map from the feature map. The network is based on the U-Net architecture. It applies gated convolution instead of conventional convolution to handle incomplete 3D point clouds. In addition, building on the original hierarchical structure of U-Net, a new alpha prediction branch is extended from the RGB branch. This alpha branch effectively improves the performance of the entire network.
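The projection step of the first module can be illustrated with a minimal sketch. It is not the authors' implementation: the pinhole camera parameters `K`, `R`, `t`, the function name `project_point_features`, and the nearest-point-wins depth test are all assumptions made for illustration, and in the real method the point features are learned rather than given.

```python
import numpy as np

def project_point_features(points, features, K, R, t, height, width):
    """Splat per-point features into an (H, W, C) map, keeping the nearest point per pixel.

    points: (N, 3) world coordinates; features: (N, C) per-point feature vectors.
    K, R, t: assumed pinhole intrinsics, rotation, and translation of the novel view.
    """
    cam = points @ R.T + t                      # world -> camera coordinates
    in_front = cam[:, 2] > 1e-6                 # drop points behind the camera
    cam, feats = cam[in_front], features[in_front]
    depth = cam[:, 2]

    pix = cam @ K.T                             # homogeneous pixel coordinates
    u = np.round(pix[:, 0] / pix[:, 2]).astype(int)
    v = np.round(pix[:, 1] / pix[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)

    feature_map = np.zeros((height, width, features.shape[1]))
    depth_buffer = np.full((height, width), np.inf)
    # Simple z-buffer: a point only writes its feature if it is the nearest so far.
    for ui, vi, di, fi in zip(u[inside], v[inside], depth[inside], feats[inside]):
        if di < depth_buffer[vi, ui]:
            depth_buffer[vi, ui] = di
            feature_map[vi, ui] = fi
    mask = np.isfinite(depth_buffer)            # True where some point landed
    return feature_map, mask
```

Pixels that no point reaches stay zero and are flagged `False` in `mask`. With a sparse or noisy point cloud many pixels end up like this, which is the kind of incomplete input the second module has to cope with.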

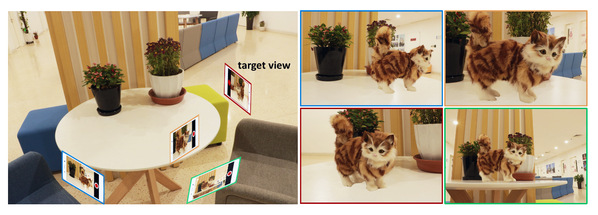
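To make the idea of gated convolution concrete, here is a small NumPy sketch. It assumes the common formulation in which one convolution produces features and a second convolution produces a sigmoid gate in [0, 1] that scales them per pixel. The helper names, the ReLU activation, and the tensor layout are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Zero-padded 'same' convolution. x: (H, W, Cin), w: (k, k, Cin, Cout), b: (Cout,)."""
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))   # zero padding on H and W only
    h, wd, _ = x.shape
    out = np.empty((h, wd, w.shape[3]))
    for i in range(h):
        for j in range(wd):
            patch = xp[i:i + k, j:j + k, :]            # (k, k, Cin) window
            out[i, j] = np.tensordot(patch, w, axes=3) + b
    return out

def gated_conv2d(x, w_feat, b_feat, w_gate, b_gate):
    """Gated convolution: activation(conv_feat(x)) * sigmoid(conv_gate(x))."""
    feat = np.maximum(conv2d_same(x, w_feat, b_feat), 0.0)        # ReLU (assumed)
    gate = 1.0 / (1.0 + np.exp(-conv2d_same(x, w_gate, b_gate)))  # soft mask in [0, 1]
    return feat * gate, gate
```

Because the gate is learned, the network can drive it toward zero where the projected feature map has holes and toward one where the input is reliable, instead of treating every pixel as equally valid the way a conventional convolution does.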

NOPC has a wide range of applications. In virtual reality (VR) and augmented reality (AR), it can realistically display objects whose transparency is hard to model, such as human hair and fuzzy toys, in a virtual 3D world. For example, users could take photos with their idols in AR.
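The alpha map is what lets a rendered fuzzy object be placed into a real scene. A minimal sketch of standard alpha compositing follows. It assumes the renderer returns an RGB image and a per-pixel alpha map with values in [0, 1]; the function and argument names are illustrative, not part of NOPC.

```python
import numpy as np

def composite_over(foreground_rgb, alpha, background_rgb):
    """Blend a rendered object over a background: out = alpha * fg + (1 - alpha) * bg.

    foreground_rgb, background_rgb: (H, W, 3) float images; alpha: (H, W) opacity map.
    """
    a = np.clip(alpha, 0.0, 1.0)[..., None]    # (H, W) -> (H, W, 1) for broadcasting
    return a * foreground_rgb + (1.0 - a) * background_rgb
```

Fractional alpha values along thin hair fibers let the background show through partially, which is why an accurate alpha map matters more for fuzzy objects than for opaque ones.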

This work was done at the School of Information Science and Technology, ShanghaiTech University. Master's student Cen Wang and Ph.D. student Minye Wu are the first and second authors, respectively. Professor Yu Jingyi is the corresponding author. The work was supported by the National Key Research and Development Program, the National Natural Science Foundation of China, STCSM, and SHMEC.

Our pipeline

The structure of the rendering network

A demonstration of an AR application.

NOPC can place a virtual cat in a real scene and generate photo-realistic images

