Recently, the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2019 released its acceptance notifications, and two papers from the groups of Prof. Gao Fei and Prof. Gao Shenghua were accepted. MICCAI is a top conference in the field, covering both Medical Image Computing (MIC) and Computer Assisted Intervention (CAI). MIC involves artificial intelligence, machine learning, image segmentation and registration, computer-aided diagnosis, and clinical and biomedical applications. CAI involves tracking and navigation, interventional imaging, and smart surgical robots.

This is the first time SIST has submitted papers to MICCAI. Submissions this year reportedly reached a record high, a 70% increase over last year, and only about 30% of all submissions were accepted.

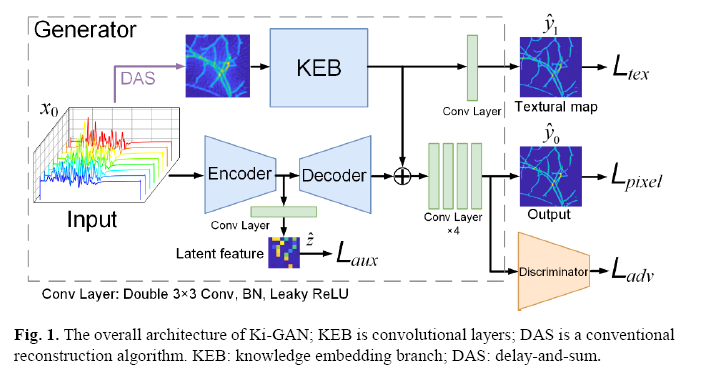

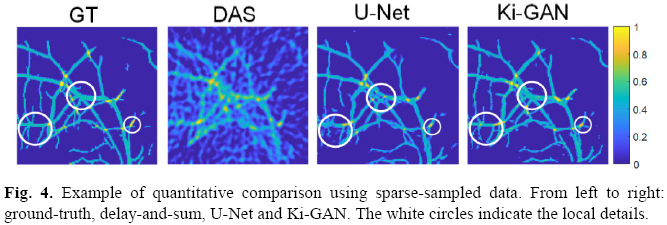

The groups of SIST Prof. Gao Fei and Prof. Gao Shenghua co-authored the paper "Ki-GAN: Knowledge Infusion Generative Adversarial Network for Photoacoustic Image Reconstruction in vivo". The paper proposes infusing classical signal processing and certified knowledge into deep learning for photoacoustic (PA) image reconstruction, which distinguishes it from existing non-iterative algorithms (direct reconstruction and post-processing). Compared with state-of-the-art methods, it achieved better reconstruction performance on both fully sampled and sparsely sampled data. Lan Hengrong from Prof. Gao Fei's group and Zhou Kang from Prof. Gao Shenghua's group are co-first authors, and Prof. Gao Fei and Prof. Gao Shenghua are co-corresponding authors.

Figure 1. The reconstruction architecture for PA imaging (Ki-GAN).

Figure 2. The reconstruction results of sparse-sampled data.

Figure 3. The reconstruction result of a rat thigh.

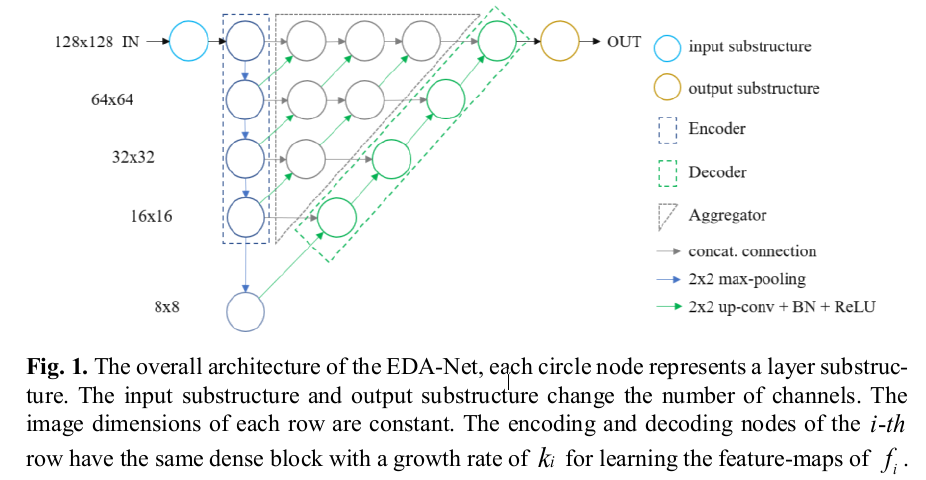

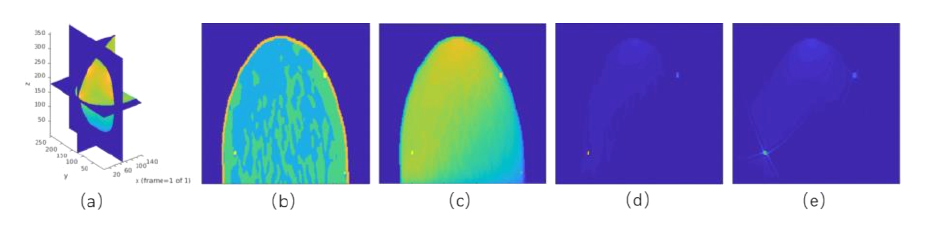

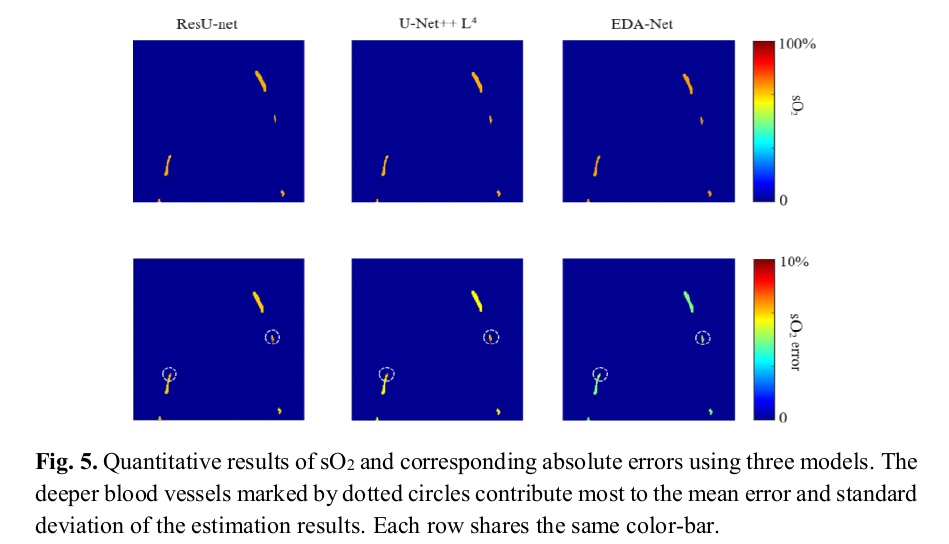
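For readers unfamiliar with the "direct" non-iterative algorithms mentioned above, a minimal sketch of delay-and-sum (DAS), one standard direct PA reconstruction method, is shown below. This is not Ki-GAN's code and does not represent the paper's method; the linear sensor array at depth zero, the nearest-sample delay, and the function name `delay_and_sum` are illustrative assumptions.

```python
import numpy as np


def delay_and_sum(signals, sensor_x, fs, c, grid_x, grid_z):
    """Naive delay-and-sum reconstruction for a linear sensor array at z = 0.

    signals:  array (n_sensors, n_samples), one PA time series per sensor
    sensor_x: array (n_sensors,), lateral sensor positions in metres
    fs:       sampling rate in Hz
    c:        assumed uniform speed of sound in m/s
    grid_x, grid_z: 1D arrays defining the image grid in metres
    """
    n_sensors, n_samples = signals.shape
    X, Z = np.meshgrid(grid_x, grid_z)  # shape (len(grid_z), len(grid_x))
    image = np.zeros_like(X, dtype=float)
    for i in range(n_sensors):
        # Time of flight from every pixel to sensor i, rounded to the nearest sample
        dist = np.sqrt((X - sensor_x[i]) ** 2 + Z ** 2)
        idx = np.rint(dist / c * fs).astype(int)
        valid = idx < n_samples
        # Sum the delayed sample from this sensor into each pixel
        image[valid] += signals[i, idx[valid]]
    return image
```

With fewer sensors (a sparser `sensor_x`), the back-projected arcs no longer cancel cleanly away from true sources, which produces the streak artifacts that make sparse-sampled reconstruction harder.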

Prof. Gao Fei's group also published the paper "EDA-Net: Dense Aggregation of Deep and Shallow Information Achieves Quantitative Photoacoustic Blood Oxygenation Imaging Deep in Human Breast". The paper proposes using encoders, decoders, and aggregators to obtain rich feature representations through deep aggregation. The densely aggregated information helps extract correlations between different wavelengths in multi-wavelength PA images, allowing the quantitative distribution of oxygen saturation (sO2) to be inferred accurately. This is the first use of deep learning (DL) to quantify oxygen saturation in the human breast, and the team was also the first to explore how combinations of 2 to 21 different wavelengths affect the quantitative results, which can provide meaningful guidance for the development of quantitative PA imaging (QPAI) systems. Yang Changchun, a first-year graduate student in Prof. Gao Fei's group, is the first author of the paper, and Prof. Gao Fei is the corresponding author.

Figure 4. The overall architecture of the proposed method (EDA-Net).

Figure 5. Illustration of patient 2.

Figure 6. Quantitative results of sO2 and the corresponding absolute errors.
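To make the target quantity concrete: sO2 is the fraction of oxygenated hemoglobin, sO2 = C_HbO2 / (C_HbO2 + C_Hb). A minimal sketch of the conventional linear spectral unmixing baseline is shown below; it is not EDA-Net's method. It assumes the per-wavelength input is already proportional to optical absorption, an assumption that breaks down deep in tissue because light fluence varies with wavelength and depth. The coefficient matrix `EPSILON` and the name `unmix_so2` are hypothetical placeholders, not real hemoglobin spectra.

```python
import numpy as np

# Hypothetical molar absorption coefficients (arbitrary units) for [HbO2, Hb]
# at three wavelengths. Placeholder values only; real analyses use published spectra.
EPSILON = np.array([
    [1.0, 3.0],  # wavelength 1 (placeholder)
    [2.0, 2.0],  # wavelength 2 (placeholder)
    [3.0, 1.0],  # wavelength 3 (placeholder)
])


def unmix_so2(mu_a, epsilon=EPSILON):
    """Linear spectral unmixing followed by sO2 = C_HbO2 / (C_HbO2 + C_Hb).

    mu_a: array (n_wavelengths, n_pixels) of absorption estimates per pixel,
          assumed fluence-corrected (the key simplification of this baseline).
    Returns an array (n_pixels,) of sO2 values.
    """
    # Least-squares solve epsilon @ conc = mu_a for the two concentrations
    conc, *_ = np.linalg.lstsq(epsilon, mu_a, rcond=None)  # shape (2, n_pixels)
    c_hbo2, c_hb = conc
    return c_hbo2 / (c_hbo2 + c_hb)
```

Using more wavelengths adds rows to `EPSILON` and overdetermines the least-squares fit, which is one intuition for why the choice of wavelength combination matters for quantitative accuracy.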

Both papers were completed at ShanghaiTech University and were funded by the ShanghaiTech University Startup Foundation, the Natural Science Foundation of Shanghai, and the National Natural Science Foundation of China.

沪公网安备 31011502006855号