The Automation and Robotics Center (STAR Center, https://star-center.shanghaitech.edu.cn/) of the School of Information Science and Technology (SIST, http://sist.shanghaitech.edu.cn/) at ShanghaiTech University had five papers accepted at the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023). The papers cover visual Simultaneous Localization and Mapping (SLAM), robot manipulation, and visual perception. IROS is a large and impactful forum for the international robotics research community to explore the frontier of science and technology in intelligent robots and smart machines, emphasizing future directions and the latest approaches, designs, and outcomes.

Visual SLAM

Image Registration Method

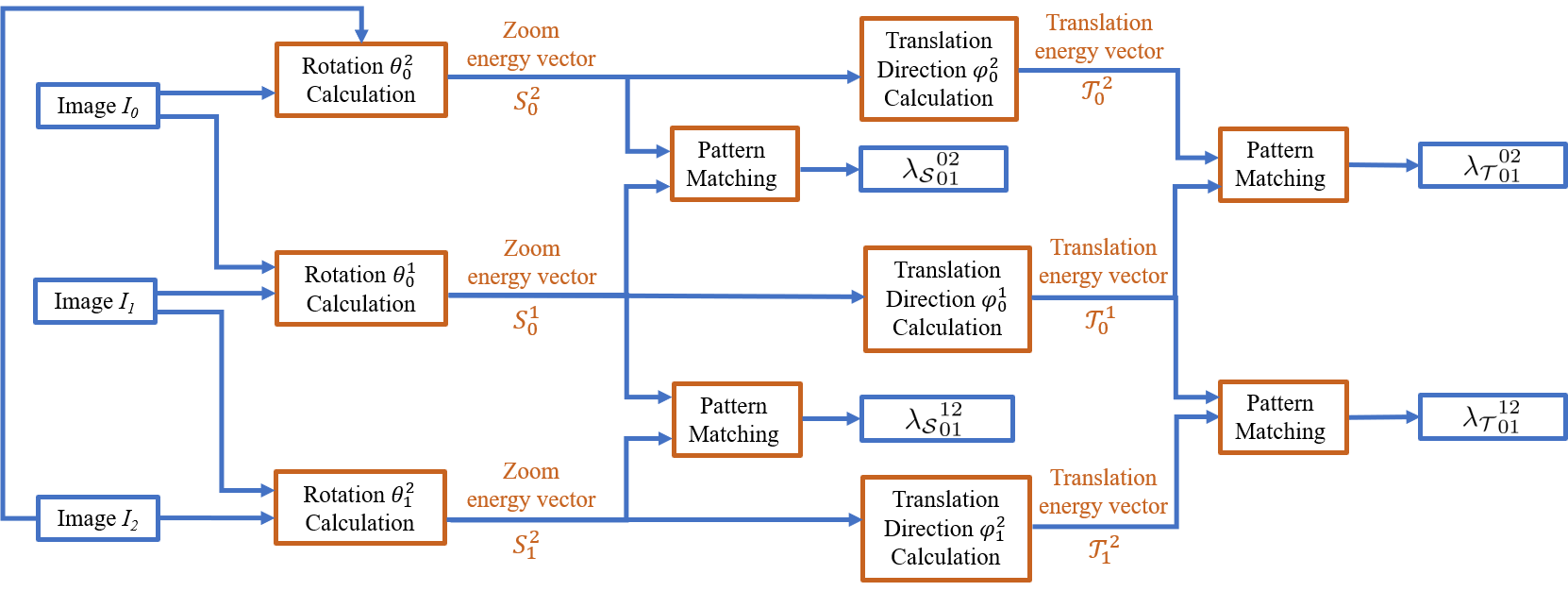

As robot applications continue to multiply, stable and efficient visual odometry (VO) algorithms become increasingly important. The extended Fourier Mellin Transform (eFMT), based on the Fourier Mellin Transform (FMT) algorithm, is an image registration method that can be applied to down-looking cameras, such as those on aerial and underwater vehicles. Building on this, the researchers developed an optimized eFMT algorithm (o-eFMT) that improves some aspects of the method and combines it with back-end optimization over small loops of three consecutive frames. To this end, they investigated the extraction of uncertainty information from eFMT registration, related objective functions, and graph-based optimization. Finally, they designed a series of experiments to study the properties of the method and compare it with other VO and SLAM algorithms. The results demonstrate the superior accuracy and speed of o-eFMT. The work was accepted by IROS 2023 under the title “Optimizing the extended Fourier Mellin Transformation Algorithm”. Jiang Wenqing, a 2020 graduate student of SIST, and Li Chengqian, a 2021 master’s student of SIST, are the co-first authors; Cao Jinyue, a 2020 graduate student of SIST, took part in the project. Professor Sören Schwertfeger is the corresponding author.

Link to the paper: https://arxiv.org/abs/2307.10015

Fig. o-eFMT image registration method flowchart
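
For readers unfamiliar with Fourier-based registration, the sketch below shows phase correlation, the standard building block that FMT-style methods use to recover the translation between two images. It is a minimal illustration under simplifying assumptions (integer, circular shifts; no rotation or scale; NumPy only) and is not the eFMT or o-eFMT implementation from the paper. The function name `phase_correlation` is hypothetical.

```python
import numpy as np

def phase_correlation(ref, moved):
    """Estimate the integer (dy, dx) circular shift that maps `ref` onto `moved`.

    Illustrative sketch only: assumes a pure translation between the images.
    """
    f_ref = np.fft.fft2(ref)
    f_mov = np.fft.fft2(moved)
    # Normalized cross-power spectrum: keeps only the phase difference.
    cross = f_mov * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12
    # The inverse transform peaks at the translation.
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the midpoint correspond to negative shifts.
    return tuple(int(p) if p <= s // 2 else int(p) - s
                 for p, s in zip(peak, corr.shape))

# Usage: shift a random image by a known amount and recover it.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
shifted = np.roll(image, shift=(5, -3), axis=(0, 1))
estimated_shift = phase_correlation(image, shifted)
```

In full FMT registration, the same correlation step is typically applied to resampled magnitude spectra first to estimate rotation and scale, and only then to the corrected images to estimate translation.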

Pose Graph Optimization

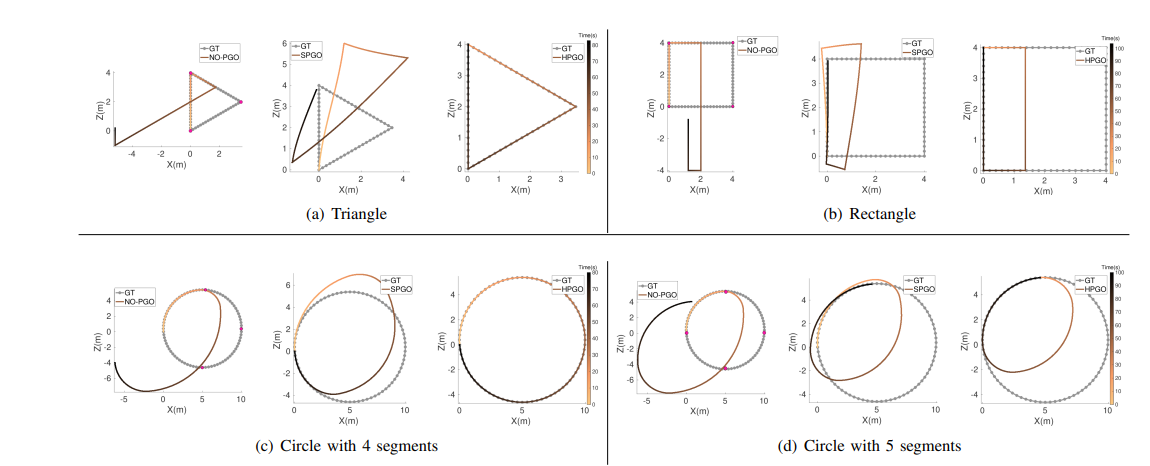

Simultaneous Localization and Mapping (SLAM) plays a crucial role in various fields such as robotics, augmented reality, and autonomous driving. Compared to multi-sensor SLAM, monocular visual SLAM is often more attractive due to the advantages of lower cost, lighter weight, and lower energy consumption of cameras. However, monocular visual SLAM suffers from scale ambiguity, as scale determination relies on the initialization of the system in the scene. This leads to inconsistent scales in the trajectories and maps obtained from different initializations in the SLAM system, known as scale-jump.

Moreover, within the same trajectory, the scale gradually becomes inconsistent over time, which is referred to as scale-drift. Scale-Drift Aware Pose Graph Optimization (SPGO) is a framework proposed by Strasdat in 2010 to correct scale drift in pose graph optimization. Building upon this, the research group led by Laurent Kneip introduced an improved graph optimization framework, referred to as Hybrid Pose Graph Optimization (HPGO), that can correct both scale-drift and scale-jump simultaneously. The work extends the objective function and includes a feasibility analysis.

The optimization framework has been integrated into the ORBSLAM system, showing promising results for real-world scenarios. The research has been published under the title “Scale jump-aware pose graph relaxation for monocular SLAM with re-initializations”, with Yuan Runze, a 2021 master's student of SIST, listed as the first author, and Laurent Kneip as the corresponding author.

Link to the paper: https://arxiv.org/abs/2307.12326

Demo and code: https://github.com/peteryuan123/HPGO-ORBSLAM3

Fig. Performance of HPGO in simulated datasets
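
To make the notion of scale-drift concrete, the toy example below chains relative motions expressed as similarity transforms (scale, rotation, translation) around a square loop. It is only a sketch under assumed values (a hypothetical 5% scale error per edge) and does not reproduce the SPGO or HPGO objective functions; the helper names `compose` and `chain` are illustrative.

```python
import numpy as np

def compose(a, b):
    """Compose two similarity transforms given as (scale, rotation, translation).

    The result maps x to s_a * R_a @ (s_b * R_b @ x + t_b) + t_a.
    """
    sa, Ra, ta = a
    sb, Rb, tb = b
    return sa * sb, Ra @ Rb, sa * (Ra @ tb) + ta

def chain(edges):
    """Accumulate relative transforms starting from the identity pose."""
    pose = (1.0, np.eye(3), np.zeros(3))
    for edge in edges:
        pose = compose(pose, edge)
    return pose

# One edge of a square loop: move 1 unit forward, then turn 90 degrees about z.
Rz90 = np.array([[0.0, -1.0, 0.0],
                 [1.0,  0.0, 0.0],
                 [0.0,  0.0, 1.0]])
step = np.array([1.0, 0.0, 0.0])

# With consistent scale, four edges return exactly to the start.
ideal = chain([(1.0, Rz90, step)] * 4)

# With an assumed 5% scale error per edge, the loop no longer closes.
drifting = chain([(1.05, Rz90, step)] * 4)
loop_log_scale_error = np.log(drifting[0])  # accumulated log-scale error
loop_position_error = np.linalg.norm(drifting[2])
```

A loop closure constraint exposes exactly this accumulated scale and position error, which is why scale is treated as an explicit variable in drift-aware pose graph formulations.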

Robot Manipulation

Micro-nano Level Robot

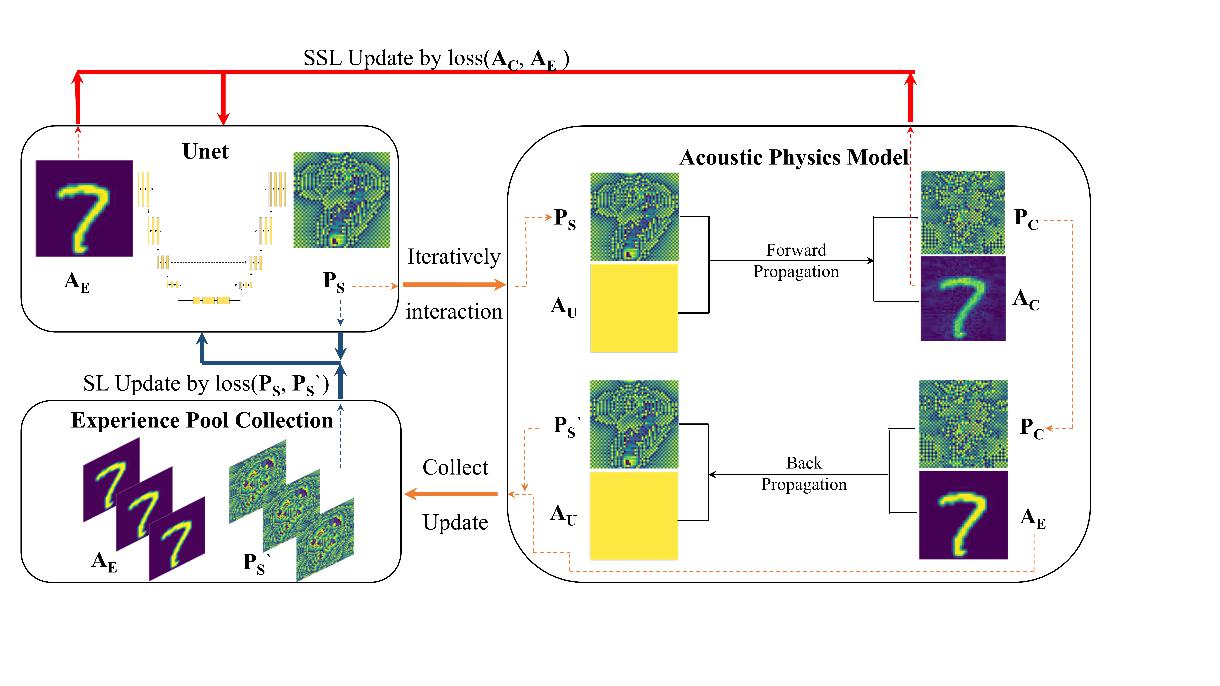

Non-contact micro-nano robotic manipulation is a new type of precision robot operation. Compared with traditional contact-based precision manipulation using grippers, it extends the scale of operable targets down to the micro-nano level, making the manipulation of biological macromolecules and subcellular structures possible. The Advanced Micro-Nano Robot Lab works on acoustic holography, a newly emerging and promising technique for dynamically reconstructing arbitrary desired holographic acoustic fields in 3D space for contactless robotic manipulation. The method reconstructs the holographic acoustic field with a phased transducer array and a physics-based deep learning framework. The work was accepted by IROS 2023 under the title “Ultrafast Acoustic Holography with Physics-Reinforced Self-Supervised Learning for Precise Robotic Manipulation”. Qingyi Lu, a 2019 undergraduate student of SIST, and Chengxi Zhong, a 2021 master’s student of SIST, are the co-first authors. Qing Liu, a 2020 master’s student of SIST, and Teng Li, an assistant researcher of SIST, took part in the project. Professor Hu Su and Professor Song Liu are the corresponding authors.

Fig. Physics-reinforced self-supervised learning model

Fish-like Robots

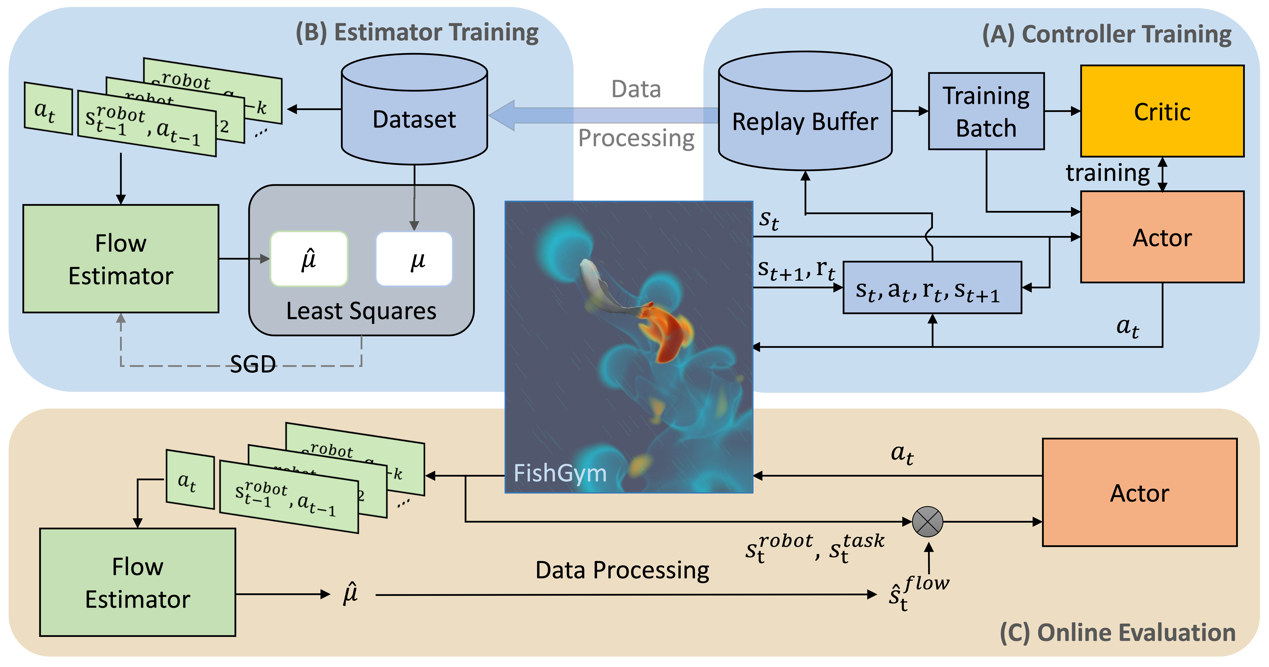

Fish-like robots have strong maneuverability, high propulsion efficiency, and a deceptive visual appearance. To improve the performance of robotic fish in various extreme environments, further research on their motion control in complex flow fields is of great significance. The paper proposes a new learning-based control framework that autonomously explores effective control strategies, enabling the robot to perform motion control tasks in non-quiescent and unknown background flow fields. A Deep Reinforcement Learning (DRL) algorithm is combined with an online estimator, so that the fish robot can estimate the flow velocity and direction in its environment without using an Artificial Lateral Line System (ALLS), and use this flow information to complete Approaching Target and Stay (ATS) control and Path-Following Control (PFC). The researchers use a high-performance Computational Fluid Dynamics (CFD) simulator called FishGym as the simulation platform, which provides nearly real-time fluid simulation data and greatly accelerates training. The simulation results show that, with the proposed learning framework, the robot successfully learns a swimming strategy that adapts to different background flows and tasks. In addition, the researchers observed some adaptive behaviors in the robot, such as rheotaxis, that resemble those of fish in nature, offering a deeper understanding of the mechanisms by which fish adapt to complex environments. The work was accepted by IROS 2023 under the title “Exploring Learning-based Control Policy for Fish-like Robots in Altered Background Flows”. Lin Xiaozhu, a 2021 master’s student of SIST, is the first author, and Professor Wang Yang is the corresponding author.

Fig. Proposed Framework

Visual Perception

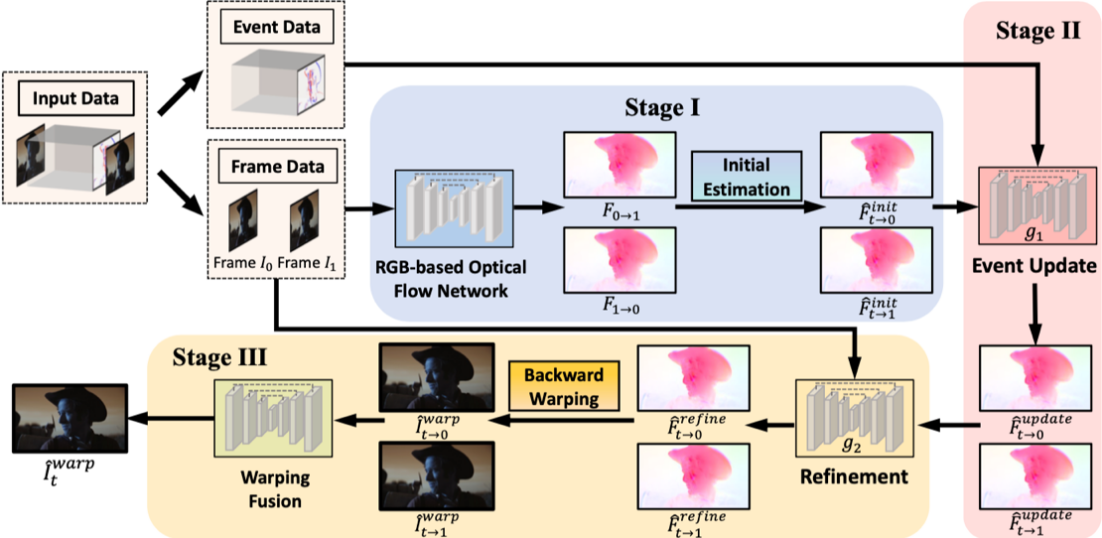

As a novel sensor, the event camera offers high dynamic range and high temporal resolution, and thus provides rich complementary information for video frame interpolation. Existing research does not make full use of the characteristics of event information. To improve the realism of interpolated frames, we revisit the intrinsic characteristics of event streams and propose two novel designs that combine synthesis-based and warping-based modules.

In light of the quasi-continuous nature of the time signals provided by event cameras, we design a Proxy-guided Synthesis strategy to incrementally synthesize intermediate frames and effectively combine both local and global temporal information. Meanwhile, given that event cameras only encode intensity changes and polarity rather than color intensities, estimating optical flow from events is arguably more difficult than from RGB information. We propose an Event-guided Recurrent Warping strategy, where we use events to calibrate optical flow estimates from RGB images. Our method demonstrates more reliable and realistic intermediate frame results compared to previous methods on both synthetic and real-world datasets.
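
To illustrate what "intensity changes and polarity" means in practice, the sketch below accumulates a small list of events into a per-pixel polarity image. The event layout `(x, y, t, p)` with `p` in {-1, +1} is an assumed convention for this example, and the function `events_to_frame` is hypothetical; it is not the event representation used in the paper.

```python
import numpy as np

def events_to_frame(events, height, width):
    """Sum event polarities per pixel.

    `events` is an (N, 4) array with columns x, y, t, p, where p is +1 for a
    brightness increase and -1 for a decrease (assumed layout).
    """
    frame = np.zeros((height, width), dtype=np.int32)
    x = events[:, 0].astype(int)
    y = events[:, 1].astype(int)
    p = events[:, 3].astype(int)
    # np.add.at accumulates correctly when the same pixel fires several times.
    np.add.at(frame, (y, x), p)
    return frame

# Usage: three events, two of them at the same pixel.
events = np.array([
    [1, 2, 0.00, +1],
    [1, 2, 0.01, +1],
    [3, 0, 0.02, -1],
])
frame = events_to_frame(events, height=4, width=5)
```

The resulting image records where and in which direction brightness changed, but carries no absolute color or intensity, which is why events alone are a weaker cue for optical flow than RGB frames.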

Fig. Architecture of the Event-guided Recurrent Warping module

This work was accepted by IROS 2023 under the title “Revisiting Event-based Video Frame Interpolation”. Chen Jiaben, a 2018 undergraduate student of SIST, is the first author, and Zhu Yichen, a 2018 undergraduate student of SIST, is the second author. Lian Dongze, a 2018 Ph.D. student, Yang Jiaqi, a 2018 undergraduate student, Wang Yifu, a postdoctoral fellow, Liu Xinhang, a 2018 undergraduate student, and Qian Shenhan, a 2019 graduate student, took part in the project. Professor Laurent Kneip supervised the work. Professor Gao Shenghua is the corresponding author.

Link to the paper: https://arxiv.org/abs/2307.12558

Project page: https://jiabenchen.github.io/revisit_event

